This overview is not meant to be comprehensive, but aims to address a few units and measures that have been misused by speakers at conferences and by others in the industry. In general, SI units are preferred for all official measurements, but lighting, like a few other industries in North America, still hangs on to a few traditional units.

Lumens per watt - luminous efficacy

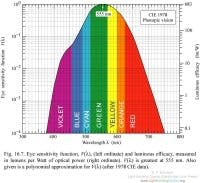

The output of an LED is obviously light and heat, but how much light does it produce and how much electrical power does it take to generate that light? Measuring the amount of light can take two forms: radiometric and photometric. A radiometric measurement gives the true optical power as determined by total energy across the spectrum of the light source. A photometric measurement instead weights that spectrum by the sensitivity of the eye, because the human eye does not respond to all wavelengths equally and has a particular response distribution within the sliver of the electromagnetic spectrum we term visible light. This distribution has the general form of a bell-shaped curve and is called the spectral luminous efficiency function, denoted V(λ) - see diagram.
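The weighting described above can be written compactly. The expression below is the standard photometric definition, given here as background rather than taken from the article itself; the symbols Φ_v (luminous flux in lumens), Φ_e,λ (spectral radiant power in watts per unit wavelength) and K_m are conventional names, not ones used elsewhere in this overview.

```latex
\Phi_v \;=\; K_m \int_{0}^{\infty} V(\lambda)\,\Phi_{e,\lambda}(\lambda)\,\mathrm{d}\lambda ,
\qquad K_m = 683\ \mathrm{lm/W}
```

Since V(λ) equals 1 at its peak near 555 nm and falls toward zero at the edges of the visible range, one watt of radiation at 555 nm yields 683 lm, while one watt in the deep red or blue yields far fewer lumens.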

Luminous efficacy has units of lumens per watt (lm/W). According to the CIE, luminous efficacy of a source (often called simply “luminous efficacy” or “source efficacy”) is the ratio of output luminous flux to input electrical power. Luminous efficacy of radiation, by contrast, is the ratio of luminous flux to radiant power; it is the theoretical maximum that a light source with a given spectral distribution can achieve at 100% radiant efficiency.
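The two ratios are easy to confuse, so a minimal sketch may help. The function names and the numbers for the LED package are hypothetical, chosen only to show that source efficacy equals the luminous efficacy of radiation multiplied by the radiant efficiency.

```python
def source_efficacy(luminous_flux_lm: float, electrical_power_w: float) -> float:
    """Luminous efficacy of a source: lumens out per electrical watt in."""
    return luminous_flux_lm / electrical_power_w


def efficacy_of_radiation(luminous_flux_lm: float, radiant_power_w: float) -> float:
    """Luminous efficacy of radiation: lumens per optical (radiant) watt."""
    return luminous_flux_lm / radiant_power_w


def radiant_efficiency(radiant_power_w: float, electrical_power_w: float) -> float:
    """Fraction of the electrical input that leaves the source as optical power."""
    return radiant_power_w / electrical_power_w


# Hypothetical LED package: 1 W electrical in, 0.3 W optical out, 100 lm.
flux_lm = 100.0
electrical_w = 1.0
radiant_w = 0.3

eta_source = source_efficacy(flux_lm, electrical_w)
ler = efficacy_of_radiation(flux_lm, radiant_w)
eta_radiant = radiant_efficiency(radiant_w, electrical_w)

print(f"source efficacy:       {eta_source:.1f} lm/W")
print(f"efficacy of radiation: {ler:.1f} lm/W")
print(f"radiant efficiency:    {eta_radiant:.0%}")
```

The efficacy of radiation depends only on the spectrum, so for this hypothetical package the only route to a higher source efficacy without changing color is a higher radiant efficiency.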

Another, often overlooked, aspect of luminous efficacy is the issue of light sources and useful lumens. A light source may deliver many lumens per watt, but that source needs to be housed, connected and usually shaded in some way to prevent glare. Glare, an undesirable side effect of lighting, often results from direct viewing of a light source. In this case, there might be many lumens generated, but if a point source is too bright this can cause eye fatigue and so the use of a light shade or other mechanism to direct or shield the light source will be useful. LEDs can deliver many useful lumens and may not require the additional infrastructure of a shade and thus an LED fixture, in some cases, can provide more useful lumens than the lamp specifications suggest.

Efficacy and performance

Efficacy improvements are often said to follow the same trend as performance improvements in LEDs, where performance is the percentage increase of radiant efficiency over a given time period. However, and this should be obvious, LED luminous efficacies must level off because they cannot double repeatedly without end. If the luminous efficacy of a white LED package is at 50 lm/W, then doubling the efficacy more than twice puts it near theoretical limits for a broadband light source. It will be difficult to go above 100% efficacy for a white light source unless you don’t mind a greenish light!

When radiant efficiency increases, the luminous efficacy of a source with a fixed spectrum increases proportionally. Obviously radiant efficiency cannot exceed 100%, and so the luminous efficacy cannot exceed the theoretical maximum, which is determined solely by the spectrum of the source. The theoretical maximum for any light spectrum is 683 lm/W (for 555 nm monochromatic radiation). For white light, the maximum is typically 300 to 350 lm/W (for a traditional fluorescent lamp spectrum).

Yoshi Ohno’s work at NIST has shown that it is theoretically possible for an RGB white LED to have a maximum of over 400 lm/W, which means that a source efficacy of 200 lm/W can be achieved with 50% LED radiant efficiency. This is the rationale for the US Department of Energy’s solid-state lighting goal of 200 lm/W.
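The arithmetic behind these limits can be sketched in a few lines. The helper names are hypothetical, and 400 lm/W is used as a round stand-in for the "over 400 lm/W" figure quoted above.

```python
def max_source_efficacy(spectrum_limit_lm_per_w: float, radiant_efficiency: float) -> float:
    """Source efficacy reachable for a spectrum with the given theoretical maximum."""
    if not 0.0 <= radiant_efficiency <= 1.0:
        raise ValueError("radiant efficiency must lie between 0 and 1")
    return spectrum_limit_lm_per_w * radiant_efficiency


def doublings_within(start_lm_per_w: float, ceiling_lm_per_w: float) -> int:
    """Count how many times efficacy can double without passing the ceiling."""
    count = 0
    value = start_lm_per_w
    while value * 2 <= ceiling_lm_per_w:
        value *= 2
        count += 1
    return count


RGB_WHITE_LIMIT = 400.0   # lm/W, round figure for the RGB white case in the text
MONOCHROMATIC_LIMIT = 683.0  # lm/W, 555 nm radiation

print(max_source_efficacy(RGB_WHITE_LIMIT, 0.5))   # 200.0
print(doublings_within(50.0, RGB_WHITE_LIMIT))      # 3
print(doublings_within(50.0, MONOCHROMATIC_LIMIT))  # 3
```

Starting from 50 lm/W, three doublings reach 400 lm/W, and a fourth would exceed even the 683 lm/W limit for monochromatic green light, which is why the doubling trend cannot continue.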

Color quality and CRI

A high lumens-per-watt value does not necessarily mean that the quality of the light is also good. The notion of color quality can be a subjective measure, but nonetheless many methods for calculating and measuring the quality of a light source have been suggested. The Color Rendering Index (CRI) is a measure that has been widely adopted and used by the lighting industry to characterize the quality of a light source. It is controversial, however, and others have noted several deficiencies, especially with respect to LED sources.

CRI is a specification that helps designers compare different lamp sources; it is a relative comparison between a lamp source and a reference source. Light sources are compared to a reference with the same color temperature (see below), and a score based on the color shift of a palette of eight base colors is used to determine the CRI value. Additional samples, including some saturated colors, have been added for a total of 14, but these do not enter the general CRI calculation.

CRI is typically used to compare sources of the same type and similar color temperature. It is supposed to work when comparing sources at different CCT as well, though it is arguable whether a light source at 2800 K with a CRI of 100 has the same color rendering performance as a light source at 6500 K that also has a CRI of 100. This is one of the deficiencies of CRI, but generally, CRI values can be compared for sources at different CCT within a reasonable range.

The CRI calculation is based on the color difference of each sample in a set of color samples when it is illuminated by the light source under test and by the reference source. The differences for the group of samples are converted to scores and averaged, giving a result with a maximum of 100. A value of 100 indicates the best match between illuminants. For very poor sources, the CRI can even be negative!
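As a rough sketch of the averaging step, the CIE method converts each sample's color difference ΔE into a special index of 100 − 4.6 ΔE and averages the first eight samples. The ΔE values below are hypothetical; computing them from real spectra (chromatic adaptation and the color-space transform) is deliberately left out.

```python
def special_index(delta_e: float) -> float:
    """Special color rendering index for one sample from its color difference."""
    return 100.0 - 4.6 * delta_e


def general_cri(delta_es: list[float]) -> float:
    """General CRI: mean of the special indices of the eight base samples."""
    if len(delta_es) != 8:
        raise ValueError("the general index uses exactly eight samples")
    return sum(special_index(d) for d in delta_es) / len(delta_es)


# Hypothetical color differences for the eight base samples.
good_source = [2.0, 3.0, 1.5, 2.5, 3.5, 2.0, 4.0, 5.0]
poor_source = [25.0] * 8  # large shifts on every sample

print(f"good source: CRI = {general_cri(good_source):.1f}")
print(f"poor source: CRI = {general_cri(poor_source):.1f}")
```

A perfect match (all ΔE equal to zero) gives exactly 100, and any average ΔE above about 21.7 pushes the result below zero, which is how a negative CRI arises.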

Because the CRI score is directly related to the spectral power distribution of the light source, it is possible to manipulate the spectrum to your advantage and produce a higher CRI value. Fluorescent lamp manufacturers have been known to 'game' the CRI metric by shifting the emission points within their lamp spectra. This can shift CRI by several points. In general though, differences of 5 points in the CRI value do not matter.

A common misunderstanding is that high CRI means that the light source will render all colors well. But this is not always the case since CRI is measured only with respect to a reference source. The reference source is either the blackbody curve (for test source CCT < 5000 K) or a CIE Daylight source (for test source CCT > 5000 K) that has the same CCT as the test source. However, the color rendering of sources at extremely low or high CCT can be very poor even though the CRI score is nearly 100.

In general, CRI values higher than 80 are considered good for indoor lighting, and higher than 90 are good for visual inspection tasks such as those required in printing or the textile industry.

Dollars per lumen

Measure the total number of lumens of a light source and divide the cost of that light source by it. This gives a metric of how much you pay per unit of light, or flux. The cost has decreased sufficiently over time that the metric is often expressed as pennies per lumen in the US or dollars per kilo-lumen. Perhaps someday it will be dollars per mega-lumen, but until then it is a simple measure of the upfront cost of a source.
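A small sketch of the unit conversions, using a hypothetical $2.00 lamp that emits 800 lm (both figures are assumptions for illustration only):

```python
def dollars_per_lumen(price_usd: float, luminous_flux_lm: float) -> float:
    """Upfront cost of a source divided by its total light output."""
    if luminous_flux_lm <= 0:
        raise ValueError("luminous flux must be positive")
    return price_usd / luminous_flux_lm


price_usd = 2.00   # hypothetical purchase price
flux_lm = 800.0    # hypothetical total output

per_lumen = dollars_per_lumen(price_usd, flux_lm)
print(f"{per_lumen * 100:.2f} cents per lumen")   # 0.25 cents per lumen
print(f"${per_lumen * 1000:.2f} per kilolumen")   # $2.50 per kilolumen
```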

Lumen-hours

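This is the photometric equivalent of the watt-hour (or kW-hour): the watt-hour is the product of power and time, and the lumen-hour is the product of luminous flux and time. Since power is energy/time, the watt-hour measures energy, and the lumen-hour correspondingly measures the total quantity of light delivered. Due to the large quantities involved, it is often expressed in millions of lumen-hours, that is, mega lumen-hours.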

Dollars per million lumen-hours

While seemingly an odd metric, this has parallels to the electric bill you pay each month for your home or business. You pay for the energy (not power) consumed each month, and the rate is expressed in dollars per kilowatt-hour ($/kWh).

Here's an example: If you have a lamp that lasts for 1000 hours and provides 1000 lumens you will have, over the life of the lamp, a total energy output of 1 million lumen-hours. If that lamp had an initial cost of $1, then the cost of that light would be $1/million-lumen-hours. This is a simplistic formulation of the Cost of Light, a way to calculate the cost of a light source over time that incorporates lifetime, power consumption and initial cost.
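The example above can be checked with a few lines of arithmetic. The function names are hypothetical; the numbers are the ones from the example.

```python
def lumen_hours(luminous_flux_lm: float, lifetime_h: float) -> float:
    """Total light delivered over the life of the lamp, in lumen-hours."""
    return luminous_flux_lm * lifetime_h


def dollars_per_million_lumen_hours(initial_cost_usd: float,
                                    luminous_flux_lm: float,
                                    lifetime_h: float) -> float:
    """Simplistic cost of light: purchase price only, no energy or labor."""
    million_lumen_hours = lumen_hours(luminous_flux_lm, lifetime_h) / 1e6
    return initial_cost_usd / million_lumen_hours


total = lumen_hours(1000.0, 1000.0)
cost = dollars_per_million_lumen_hours(1.0, 1000.0, 1000.0)

print(f"{total:,.0f} lumen-hours over the lamp's life")  # 1,000,000
print(f"${cost:.2f} per million lumen-hours")             # $1.00
```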

True cost of light

But the true Cost of Light involves several other factors, such as the cost of energy and the cost of replacing the lamp. The equation below relates all of these factors into a more complete cost of light measure. By inspection, you can also see that notions of luminous efficacy or cost per lumen are built into this formulation as well. There are several interesting aspects to this measure, including its sensitivity to lifetime and to lumen output. With lifetime in the denominator of part of the sum, this formulation suggests that there will be diminishing returns as lifetime increases. Lamp and labor costs are less meaningful as lifetime increases appreciably. Evaluations today suggest that, based on current costs, lifetimes above 60,000 to 70,000 hours result in diminishing returns on a Cost of Light basis.
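One commonly used way to write such a measure is sketched below. It is offered as an assumption consistent with the description above (lamp and labor cost spread over lifetime, plus energy cost, all per unit of light), not necessarily the exact expression the author had in mind; the symbol names are illustrative.

```latex
\text{Cost of Light}\;\left[\frac{\$}{10^{6}\ \text{lm}\cdot\text{h}}\right]
\;=\; \frac{10^{6}}{\Phi_v}
\left( \frac{C_{\text{lamp}} + C_{\text{labor}}}{T} \;+\; P \, c_{\text{energy}} \right)
```

Here Φ_v is the lumen output in lm, C_lamp and C_labor are the lamp price and replacement labor in dollars, T is the lifetime in hours, P is the power draw in kW, and c_energy is the energy price in $/kWh. With P = 0, no labor, a $1 lamp, 1000 lm and 1000 hours, the expression reduces to the $1 per million lumen-hours of the simple example.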

Similarly, the Cost of Light approaches an asymptote as lumen output increases. Costs will certainly decrease as lumen output rises, but diminishing returns come into play. Again, several ‘what-if’ scenarios suggest that lumen outputs above 1,000 to 2,000 lumens for a lamp/fixture source will result in little change in the overall Cost of Light.
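The diminishing returns can be explored with a small what-if sketch. It assumes a cost of light equal to lamp plus labor cost spread over lifetime, plus energy cost, all divided by lumen output. The prices, power and efficacy below are hypothetical, and the lumen sweep additionally assumes a fixed efficacy of 80 lm/W so that power scales with output.

```python
def cost_of_light(lamp_cost_usd: float, labor_cost_usd: float, lifetime_h: float,
                  flux_lm: float, power_w: float,
                  energy_price_usd_per_kwh: float) -> float:
    """Assumed cost of light in dollars per million lumen-hours."""
    replacement_per_hour = (lamp_cost_usd + labor_cost_usd) / lifetime_h
    energy_per_hour = (power_w / 1000.0) * energy_price_usd_per_kwh
    return (replacement_per_hour + energy_per_hour) / flux_lm * 1e6


# Hypothetical baseline lamp: $20 lamp, $5 labor, 800 lm, 10 W, $0.10/kWh.
BASE = dict(lamp_cost_usd=20.0, labor_cost_usd=5.0, flux_lm=800.0,
            power_w=10.0, energy_price_usd_per_kwh=0.10)

# What-if 1: sweep lifetime with everything else fixed.
lifetimes_h = [10_000, 20_000, 40_000, 80_000, 160_000]
by_lifetime = [cost_of_light(lifetime_h=t, **BASE) for t in lifetimes_h]
for t, c in zip(lifetimes_h, by_lifetime):
    print(f"lifetime {t:>7,} h -> ${c:.3f} per Mlm-h")

# What-if 2: sweep lumen output at a fixed (assumed) efficacy of 80 lm/W.
EFFICACY_LM_PER_W = 80.0
fluxes_lm = [250.0, 500.0, 1000.0, 2000.0, 4000.0]
by_flux = [cost_of_light(20.0, 5.0, 50_000, f, f / EFFICACY_LM_PER_W, 0.10)
           for f in fluxes_lm]
for f, c in zip(fluxes_lm, by_flux):
    print(f"output {f:>6,.0f} lm -> ${c:.3f} per Mlm-h")
```

In both sweeps each doubling saves less than the one before, and the cost settles toward the energy term alone ($1.25 per million lumen-hours for these inputs), which is the asymptote described above.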

Color temperature

Color temperature by itself isn't a metric of performance. It's typically a specification of the type of light source, and is used to describe the color of white light.

The term ‘temperature’ refers to the real temperature of an idealized object from physics called a ‘black body’. A black body absorbs all light and energy that strikes it but, according to thermodynamics, is also a perfect emitter. A close day-to-day equivalent can be seen in materials such as iron, which, when heated, gradually begins to glow visibly. The colors change as a function of temperature: first red, then orange, then yellow, up through white and blue. The temperature of the material corresponding to those colors is termed the color temperature.

Color temperatures are measured on an absolute temperature scale, in kelvins (K), where the Kelvin temperature is the Celsius value plus about 273. Thus, room temperature is about 22 °C or 295 K. The colors corresponding to particular temperatures are shown in the curve within the CIE diagram below.

Correlated color temperature (CCT)

Correlated color temperature refers to the closest point on the black body curve to a particular color as defined by its chromaticity value, that is, its x, y value on the CIE chromaticity diagram. The reference sources are color temperatures that fall on the black body curve. How far off the curve can a color be and still have a CCT? According to the CIE, chromaticity values that are further than 0.05 from the black body curve are simply colors and do not have a CCT.
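For a quick estimate of CCT from x, y chromaticity, a widely used shortcut is McCamy's cubic approximation, sketched below. Note that this is a curve fit rather than the closest-point search described above, it is only reasonable for chromaticities near the black body curve, and it does not check the 0.05 distance limit. The function name is hypothetical; the chromaticity values are the standard coordinates for CIE illuminants D65 and A.

```python
def mccamy_cct(x: float, y: float) -> float:
    """Approximate CCT in kelvins from CIE 1931 x, y (McCamy's formula)."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# CIE standard illuminants used as sanity checks.
d65 = mccamy_cct(0.3127, 0.3290)   # daylight, nominally about 6500 K
ill_a = mccamy_cct(0.4476, 0.4074)  # incandescent, nominally about 2856 K

print(f"D65: about {d65:.0f} K")
print(f"A:   about {ill_a:.0f} K")
```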

Summary

These are just a few metrics for lighting that come up often in discussions of light sources and LEDs as light sources. This is by no means complete and you should consult the IESNA Handbook and other books on color and photometry for more in-depth discussions on these topics.

Acknowledgements

Thanks to Yoshi Ohno at NIST and Fred Schubert at RPI for their comments.